Now that we have studied general test writing strategies, ideas, and tips, it is time to pull our focus inward to the details of the questions themselves.

In general, question types fall into two categories:

- Objective

- Subjective

I wanted specific definitions for these, and I found them in this handout:

Source: http://www.k-state.edu/ksde/alp/resources/Handout-Module6.pdf

1. Objective, which require students to select the correct response from several alternatives or to supply a word or short phrase to answer a question or complete a statement.

Examples: multiple choice, true-false, matching, completion

2. Subjective or essay, which permit the student to organize and present an original answer

Examples: short-answer essay, extended-response essay, problem solving, performance test items

This source also suggests guidelines for choosing between them:

Essay tests are appropriate when:

- The group to be tested is small and the test is not to be reused

- You wish to encourage and reward the development of student skill in writing

- You are more interested in exploring student attitudes than in measuring his/her achievement

Objective tests are appropriate when:

- The group to be tested is large and the test may be reused.

- Highly reliable scores must be obtained as efficiently as possible.

- Impartiality of evaluation, fairness, and freedom from possible test scoring influences are essential.

Either essay or objective tests can be used to:

- Measure almost any important educational achievement a written test can measure

- Test understanding and ability to apply principles.

- Test ability to think critically.

- Test ability to solve problems.

And it continues with this bit of advice:

The matching of learning objective expectations with certain item types provides a high degree of test validity: testing what is supposed to be tested.

- Demonstrate or show: performance test items

- Explain or describe: essay test items

I wanted to see what other sources would say, so I also looked at this one:

Source: http://www.helpteaching.com/about/how_to_write_good_test_questions/

If you want the student to compare and contrast an issue taught during a history lesson, open ended questions may be the best option to evaluate the student’s understanding of the subject matter.

If you are seeking to measure the student’s reasoning skills, analysis skills, or general comprehension of a subject matter, consider selecting primarily multiple choice questions.

Or, for a varied approach, utilize a combination of all available test question types so that you can appeal to the learning strengths of any student on the exam.

Take into consideration both the objectives of the test and the overall time available for taking and scoring your tests when selecting the best format.

I am not sure that multiple choice should be the primary choice, but I understand they are suggesting that you avoid open-ended questions if you want to measure reasoning skills, analysis skills, or general comprehension.

This bothers me a little. It seems to me, from reviewing the previous posts in this blog, that an open-ended question could measure those skills. The example that comes to mind is the question I had in botany about describing the cell types a pin might encounter when passing through a plant stem. That was an essay question measuring general comprehension of plant tissues.

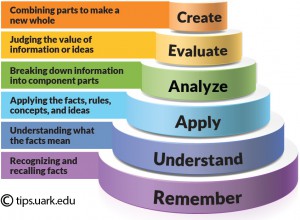

The following source brings up good points about analyzing the results. It also notes that objective tests, when “constructed imaginatively,” can test at higher levels of Bloom’s Taxonomy.

Source: http://www.calm.hw.ac.uk/GeneralAuthoring/031112-goodpracticeguide-hw.pdf

Objective tests are especially well suited to certain types of tasks. Because questions can be designed to be answered quickly, they allow lecturers to test students on a wide range of material. … Additionally, statistical analysis on the performance of individual students, cohorts and questions is possible.

The capacity of objective tests to assess a wide range of learning is often underestimated. Objective tests are very good at examining recall of facts, knowledge and application of terms, and questions that require short text or numerical responses. But a common worry is that objective tests cannot assess learning beyond basic comprehension.

However, questions that are constructed imaginatively can challenge students and test higher learning levels. For example, students can be presented with case studies or a collection of data (such as a set of medical symptoms) and be asked to provide an analysis by answering a series of questions…

Problem solving can also be assessed with the right type of questions. …

A further worry is that objective tests result in inflated scores due to guessing. However, the effects of guessing can be eliminated through a combination of question design and scoring techniques. With the right number of questions and distracters, distortion through guessing becomes largely irrelevant. Alternatively, guessing can be encouraged and measured if this is thought to be a desirable skill.

There are, however, limits to what objective tests can assess. They cannot, for example, test the competence to communicate, the skill of constructing arguments or the ability to offer original responses. Tests must be carefully constructed in order to avoid the decontextualisation of knowledge (Paxton 1998) and it is wise to use objective testing as only one of a variety of assessment methods within a module. However, in times of growing student numbers and decreasing resources, objective testing can offer a viable addition to the range of assessment types available to a teacher or lecturer.
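The guide's claim about guessing is easy to check with a little arithmetic. Below is a minimal Python sketch that rests on my own assumptions, because the guide does not say which scoring technique it has in mind. The sketch does three things:

- It computes the score a student would expect from blind guessing.
- It computes the chance of reaching a pass mark by guessing alone.
- It applies one common "correction for guessing" (formula scoring), R - W/(k - 1). Here R is the number right, W the number wrong, and k the number of options per item.

The function names, test lengths, and 60% pass marks are hypothetical and purely illustrative.

```python
from math import comb


def expected_guess_score(n_items: int, n_options: int) -> float:
    """Expected number correct if every item is answered by blind guessing."""
    return n_items / n_options


def prob_pass_by_guessing(n_items: int, n_options: int, pass_mark: int) -> float:
    """Chance of getting at least `pass_mark` items right by blind guessing.

    Each item is treated as an independent trial with success probability
    1/n_options, so the number right is binomial; we sum its upper tail.
    """
    p = 1 / n_options
    return sum(
        comb(n_items, r) * p**r * (1 - p) ** (n_items - r)
        for r in range(pass_mark, n_items + 1)
    )


def corrected_score(right: int, wrong: int, n_options: int) -> float:
    """Formula scoring, R - W/(k - 1): an assumed example technique.

    Omitted items are neither rewarded nor penalized.
    """
    return right - wrong / (n_options - 1)


if __name__ == "__main__":
    # Hypothetical 10-item true-false quiz with a 60% pass mark.
    print(expected_guess_score(10, 2))                # 5.0
    print(round(prob_pass_by_guessing(10, 2, 6), 3))  # 0.377

    # Hypothetical 40-item, four-option multiple-choice test, same pass mark.
    print(expected_guess_score(40, 4))                # 10.0
    print(prob_pass_by_guessing(40, 4, 24) < 0.0001)  # True

    # A blind guesser's expected tally on that test: 10 right, 30 wrong.
    print(corrected_score(10, 30, 4))                 # 0.0
```

On ten true-false items, a pure guesser reaches 6/10 about 38% of the time. On forty four-option items, reaching 24/40 by guessing alone is vanishingly unlikely. That seems to be the sense in which "the right number of questions and distracters" makes guessing largely irrelevant. Formula scoring also pulls a blind guesser's expected score back to zero.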

I like their point that objective tests cannot assess the competence to communicate, the skill of constructing arguments, or the ability to offer original answers. Training our students to take only multiple choice tests (or simply answer "true" or "false") does not help them learn to explain their thoughts, nor does it ensure that they can write coherent sentences.

This concern is addressed by the second source and in previous posts: the suggestion is to use a variety of test item types. A mix can give you a better picture of what your students know. Relying on a single type, by contrast, can be biased against students who do not perform well on that type.
